Do keep this limitation in mind if you’re thinking about populating all four USB 3.0 ports with high-speed storage with the intent of building a low-cost Thunderbolt alternative. A single PCIe 2.0 lane offers a maximum of 500MB/s of bandwidth in either direction (1GB/s aggregate), which is enough for the real world max transfer rates over USB 3.0. I believe it’s the FL1100, which is a PCIe 2.0 to 4-port USB 3.0 controller. The 8th PCIe lane off of the PCH is used by a Fresco Logic USB 3.0 controller.

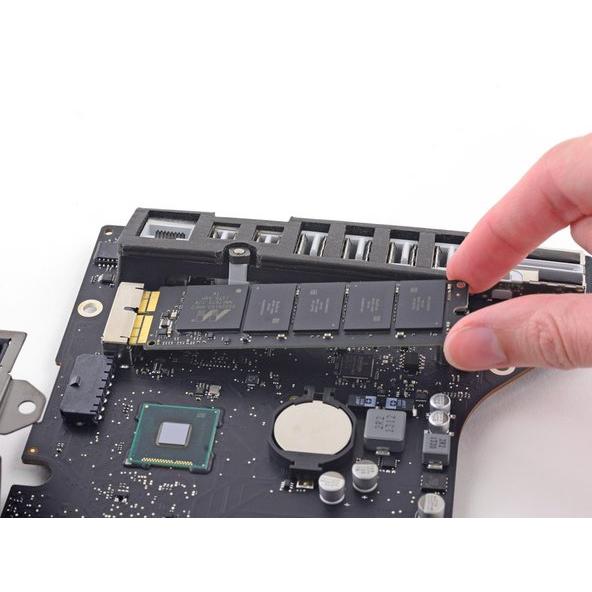

That’s right, it’s nearly 2014 and Intel is shipping a flagship platform without USB 3.0 support. Here we really get to see how much of a mess Intel’s workstation chipset lineup is: the C600/X79 PCH doesn’t natively support USB 3.0. That leaves a single PCIe lane unaccounted for in the Mac Pro. All Mac Pros ship with a PCIe x4 SSD, and those four lanes also come off the PCH. Here each Gigabit Ethernet port gets a dedicated PCIe 2.0 x1 lane, the same goes for the 802.11ac controller.

I wanted to figure out how these PCIe lanes were used by the Mac Pro, so I set out to map everything out as best as I could without taking apart the system (alas, Apple tends to frown upon that sort of behavior when it comes to review samples). The PCH also has another 8 PCIe 2.0 lanes, just like in the conventional desktop case. That’s enough for each GPU in a dual-GPU setup to get a full 16 lanes, and to have another 8 left over for high-bandwidth use. Here the CPU has a total of 40 PCIe 3.0 lanes. Ivy Bridge E/EP on the other hand doubles the total number of PCIe lanes compared to Intel’s standard desktop platform: The 8 remaining lanes are typically more than enough for networking and extra storage controllers. In a dual-GPU configuration those 16 PCIe 3.0 lanes are typically divided into an 8 + 8 configuration. You’ve got a total of 16 PCIe 3.0 lanes that branch off the CPU, and then (at most) another 8 PCIe 2.0 lanes hanging off of the Platform Controller Hub (PCH). Here’s what a conventional desktop Haswell platform looks like in terms of PCIe lanes: The second point is a connectivity argument. Even though each of those cores is faster than what you get with an Ivy Bridge EP, for applications that can spawn more than 4 CPU intensive threads you’re better off taking the IPC/single threaded hit and going with an older architecture that supports more cores. a conventional desktop Haswell for the Mac Pro and you’ll get two responses: core count and PCIe lanes. Ask anyone at Apple why they need Ivy Bridge EP vs.
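To make the lane arithmetic concrete, here is a minimal Python sketch that tallies the budgets quoted above. The `Platform` type and the helpers `cpu_lanes_left` and `per_port_share` are hypothetical names made up for illustration. The per-device PCH table is a reading of the Mac Pro description (two Gigabit Ethernet ports, the 802.11ac controller, the x4 SSD and the USB 3.0 controller), and the per-port USB figure assumes all four ports are busy and split the single PCIe 2.0 lane evenly, ignoring USB and PCIe protocol overhead.

```python
from dataclasses import dataclass

# PCIe 2.0 per-lane bandwidth in each direction, as quoted in the text.
PCIE2_MB_PER_LANE = 500


@dataclass(frozen=True)
class Platform:
    """Hypothetical model of a platform's PCIe lane budget."""
    name: str
    cpu_lanes: int  # PCIe 3.0 lanes branching off the CPU
    pch_lanes: int  # PCIe 2.0 lanes hanging off the PCH


HASWELL_DESKTOP = Platform("Haswell desktop", cpu_lanes=16, pch_lanes=8)
IVY_BRIDGE_EP = Platform("Ivy Bridge EP", cpu_lanes=40, pch_lanes=8)

# Mac Pro PCH allocation as described in the text (PCIe 2.0 lanes per device).
MAC_PRO_PCH = {
    "Gigabit Ethernet port 1": 1,
    "Gigabit Ethernet port 2": 1,
    "802.11ac controller": 1,
    "PCIe x4 SSD": 4,
    "Fresco Logic USB 3.0 controller": 1,
}


def cpu_lanes_left(platform: Platform, gpu_widths: list[int]) -> int:
    """Return CPU lanes left after wiring up the GPUs; raise if they don't fit."""
    used = sum(gpu_widths)
    if used > platform.cpu_lanes:
        raise ValueError(
            f"{platform.name}: GPUs need {used} lanes, "
            f"only {platform.cpu_lanes} available"
        )
    return platform.cpu_lanes - used


def per_port_share(lanes: int, ports: int) -> float:
    """Idealized MB/s per port, per direction, when all ports share evenly."""
    return lanes * PCIE2_MB_PER_LANE / ports


if __name__ == "__main__":
    print(cpu_lanes_left(HASWELL_DESKTOP, [8, 8]))   # 0
    print(cpu_lanes_left(IVY_BRIDGE_EP, [16, 16]))   # 8
    print(sum(MAC_PRO_PCH.values()))                 # 8: every PCH lane is used
    print(per_port_share(1, 4))                      # 125.0
```

On desktop Haswell an 8 + 8 GPU split consumes every CPU lane, while Ivy Bridge EP still has 8 spare after two x16 GPUs. The last line is the idealized worst case for the shared USB 3.0 controller: roughly 125MB/s per port, per direction, when all four ports are saturated at once.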
